GlusterFS is a maturing distributed filesystem and is now part of Red Hat Storage Server. GlusterFS, like Ceph (not covered in this article), is a software-based filesystem that locates data by computation rather than by consulting a central metadata index. A hash algorithm distributed to the clients lets each client work out where a file lives, which removes the bottleneck of searching metadata for every file we attempt to read. This design also allows for easy replication, even to nodes outside of the current infrastructure. Because GlusterFS runs in user space, kernel updates and changes are less of a worry than with older distributed filesystems.
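To make the idea of hash-based placement concrete, here is a toy sketch in shell. It is not GlusterFS's actual hashing algorithm: the helper name `pick_brick` and the use of `cksum` as the hash are assumptions made purely for illustration. The point is only that any client holding the same brick list can compute a file's location by itself, without asking a metadata server.

```shell
#!/bin/sh
# Toy illustration of hash-based placement (NOT GlusterFS's real algorithm).
# pick_brick is a hypothetical helper: it hashes a file name with cksum and
# maps the result onto one of the bricks passed as the remaining arguments.
pick_brick() {
    pb_name=$1
    shift                                   # "$@" now holds only the bricks
    [ $# -gt 0 ] || return 1                # need at least one brick
    pb_hash=$(printf '%s' "$pb_name" | cksum | cut -d ' ' -f 1)
    shift $(( $pb_hash % $# ))              # skip ahead to the chosen brick
    printf '%s\n' "$1"
}

# Every client computes the same answer for the same name and brick list.
pick_brick data1 gluster01:/webdata gluster02:/webdata
```

In a purely replicated volume like the one built in this tutorial, every brick holds every file, so placement by hash only comes into play once a volume distributes files across several bricks or replica sets.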

GlusterFS is open source, its speeds can match or beat most enterprise solutions, it is easy to use, and it takes a cloud-style approach to storage that runs on commodity hardware. This makes the solution appealing to startups, non-profits, or anyone running a mostly cloud-based infrastructure. Another bonus is that files are stored as ordinary files on a standard filesystem such as ext3 or ext4, so there is no need for data migrations when moving to a new version of Gluster. If Gluster is not working for you, you can simply strip it away without converting filesystems.

Below is a quick tutorial that builds a small, 2-node GlusterFS filesystem to provide redundant storage for two load-balanced Apache servers. We will be running Ubuntu Server 14.04. The result acts like a mirrored-RAID NAS device.

Clients: websrv01, websrv02

GlusterFS servers: gluster01, gluster02

We are going to add the Gluster PPA (personal package archive) on all 4 of our Linux servers, which will allow us to pull down the packages.

sudo apt-get install software-properties-common

sudo add-apt-repository ppa:gluster/glusterfs-3.5

sudo apt-get update

Configuring the Servers

Here we will go ahead and configure the GlusterFS servers. Let’s first install the GlusterFS server package.

sudo apt-get install glusterfs-server

Once these packages are installed, we will establish connectivity and trust between the 2 hosts.

gluster@gluster01:~$ sudo gluster peer probe gluster02

peer probe: success.

Now that both nodes trust each other, we can begin creating our storage volume. (In Gluster terminology, the directory each server contributes to a volume is known as a brick.) As we are using GlusterFS to create a redundant volume for our web servers, we will use the replica option, not stripe. Here we specify the volume name, the number of replicas to mirror across, our transport method (TCP), and the brick paths where the data will be stored.

gluster@gluster01:~$ sudo gluster volume create webdata replica 2 transport tcp gluster01:/webdata gluster02:/webdata force

volume create: webdata: success: please start the volume to access data
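The pieces of that command can be assembled mechanically, which helps when scripting a setup. The sketch below only prints a command; it never runs `gluster`. The helper name `volume_create_cmd` is hypothetical, and it assumes every host uses the same brick path and that the replica count equals the number of hosts. The trailing `force` used above is left off on purpose, so add it yourself if your brick paths require it.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) a replicated "volume create"
# command for the given volume name, brick path and list of hosts.
# The replica count is simply the number of hosts supplied.
volume_create_cmd() {
    vc_volume=$1
    vc_path=$2
    shift 2
    vc_cmd="gluster volume create $vc_volume replica $# transport tcp"
    for vc_host in "$@"; do
        vc_cmd="$vc_cmd $vc_host:$vc_path"
    done
    printf '%s\n' "$vc_cmd"
}

# Prints the same command used above, minus sudo and the trailing "force".
volume_create_cmd webdata /webdata gluster01 gluster02
```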

All that is left is to start our new volume.

gluster@gluster01:~$ sudo gluster volume start webdata

volume start: webdata: success

Configuring the Clients

Again, we start by installing the packages.

sudo apt-get install glusterfs-client

Now I am going to create a directory on which to mount the GlusterFS volume, and add an entry to fstab so that it is mounted at every boot.

webadmin@websrv02:~$ sudo mkdir /webdata

webadmin@websrv02:~$ sudo vi /etc/fstab

#Add the following entry

gluster01:/webdata /webdata glusterfs defaults,_netdev 0 0

webadmin@websrv02:~$ sudo mount -a

webadmin@websrv02:~$ df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/websrv02--vg-root ext4 14G 1.8G 11G 15% /

none tmpfs 4.0K 0 4.0K 0% /sys/fs/cgroup

udev devtmpfs 486M 4.0K 486M 1% /dev

tmpfs tmpfs 100M 408K 99M 1% /run

none tmpfs 5.0M 0 5.0M 0% /run/lock

none tmpfs 497M 0 497M 0% /run/shm

none tmpfs 100M 0 100M 0% /run/user

/dev/sda1 ext2 236M 68M 156M 31% /boot

gluster01:/webdata fuse.glusterfs 47G 1.6G 43G 4% /webdata
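If you script the client setup instead of editing fstab by hand, it is worth making the step safe to repeat. The following is a minimal sketch, assuming a hypothetical helper named `add_fstab_entry`; it is demonstrated on a scratch file rather than the real /etc/fstab, and it only guards against an exact duplicate line.

```shell
#!/bin/sh
# Hypothetical helper: append a line to an fstab-style file only if that
# exact line is not already present, so repeated runs do not duplicate it.
add_fstab_entry() {
    af_file=$1
    af_entry=$2
    if ! grep -qxF -- "$af_entry" "$af_file" 2>/dev/null; then
        printf '%s\n' "$af_entry" >> "$af_file"
    fi
}

# Demonstrated on a scratch file rather than the real /etc/fstab.
scratch=$(mktemp)
add_fstab_entry "$scratch" 'gluster01:/webdata /webdata glusterfs defaults,_netdev 0 0'
add_fstab_entry "$scratch" 'gluster01:/webdata /webdata glusterfs defaults,_netdev 0 0'
cat "$scratch"    # the entry appears once, despite two calls
rm -f "$scratch"
```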

Take note that it does not matter which Gluster host you point the mount at. On websrv01 I will mount through gluster02 instead.

webadmin@websrv01:~$ df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/websrv01--vg-root ext4 14G 1.9G 11G 15% /

none tmpfs 4.0K 0 4.0K 0% /sys/fs/cgroup

udev devtmpfs 486M 4.0K 486M 1% /dev

tmpfs tmpfs 100M 408K 99M 1% /run

none tmpfs 5.0M 0 5.0M 0% /run/lock

none tmpfs 497M 0 497M 0% /run/shm

none tmpfs 100M 0 100M 0% /run/user

/dev/sda1 ext2 236M 68M 156M 31% /boot

gluster02:/webdata fuse.glusterfs 47G 1.6G 43G 4% /webdata
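Rather than reading the df output by eye, a script can check that the mount really is a GlusterFS one. This is a rough sketch, assuming a hypothetical helper named `is_gluster_mount` and the column layout shown above (type in the second column, mount point in the last). It reads the listing from standard input so it can be tried on sample text.

```shell
#!/bin/sh
# Hypothetical helper: read "df -T" style output on stdin and succeed only
# if the given mount point is listed with filesystem type fuse.glusterfs.
is_gluster_mount() {
    awk -v mp="$1" '
        $2 == "fuse.glusterfs" && $NF == mp { found = 1 }
        END { exit found ? 0 : 1 }
    '
}

# Sample rows copied from the df output above; on a live client you would
# pipe "df -T" into the function instead.
is_gluster_mount /webdata <<'EOF' && echo "/webdata is a GlusterFS mount"
/dev/sda1 ext2 236M 68M 156M 31% /boot
gluster02:/webdata fuse.glusterfs 47G 1.6G 43G 4% /webdata
EOF
```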

Because the client is handed the volume layout and hash algorithm when it mounts, if we lose gluster02 the client will reach out to gluster01 for the file, regardless of which host was named in the mount. I will add some test data on websrv01 and check that we can see those files on websrv02.

#These permission changes are bad practice, more just to demonstrate.

webadmin@websrv01:/webdata$ sudo chmod 775 /webdata/

webadmin@websrv01:/webdata$ sudo chown root:webadmin /webdata/

webadmin@websrv01:/webdata$ touch data{1..40}

webadmin@websrv01:/webdata$ ls

data1 data11 data13 data15 data17 data19 data20 data22 data24 data26 data28 data3 data31 data33 data35 data37 data39 data40 data6 data8

data10 data12 data14 data16 data18 data2 data21 data23 data25 data27 data29 data30 data32 data34 data36 data38 data4 data5 data7 data9

webadmin@websrv02:~$ cd /webdata/

webadmin@websrv02:/webdata$ ls

data1 data11 data13 data15 data17 data19 data20 data22 data24 data26 data28 data3 data31 data33 data35 data37 data39 data40 data6 data8

data10 data12 data14 data16 data18 data2 data21 data23 data25 data27 data29 data30 data32 data34 data36 data38 data4 data5 data7 data9

Already, in a matter of minutes, we have a redundant, fast and flexible storage pool for our web servers. Now let us make sure no other machines can connect to this volume, as the current setup allows any server to mount it. We only need to do this from 1 storage node, as the setting is propagated to the other. Note: make sure you have functional DNS, add the entries manually, or use IP addresses. I ended up adding the hosts to /etc/hosts once my DNS decided to stop resolving for no reason.

gluster@gluster01:~$ sudo gluster volume set webdata auth.allow websrv01,websrv02
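A typo in that list can lock a web server out of the volume, so it can be handy to sanity-check the value before applying it. The sketch below is only a plain string check on a comma-separated list, using a hypothetical helper named `in_allow_list`; it does not reproduce how Gluster itself evaluates auth.allow, and it ignores wildcard patterns entirely.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a host appears in a comma-separated
# allow list such as the value given to auth.allow. Exact string match
# only; wildcard patterns are deliberately not handled here.
in_allow_list() {
    case ",$1," in
        *",$2,"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# websrv03 is an invented host name, included to show a rejection.
for host in websrv01 websrv02 websrv03; do
    if in_allow_list "websrv01,websrv02" "$host"; then
        echo "$host: allowed"
    else
        echo "$host: rejected"
    fi
done
```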

For additional information on the status of your GlusterFS cluster, we can go into the gluster console.

Type: Replicate

Volume ID: f253dab7-8d10-49a5-aec7-da4f4f755a8d

Status: Started

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: gluster01:/webdata

Brick2: gluster02:/webdata

Options Reconfigured:

auth.allow: websrv01,websrv02

gluster> peer status

Number of Peers: 1

Hostname: gluster02

Uuid: ea354d6a-f7a9-4ef6-abec-3de9fa013c11

State: Peer in Cluster (Connected)

gluster> volume profile webdata start

Starting volume profile on webdata has been successful

gluster> volume profile webdata info

Brick: gluster01:/webdata

-------------------------

Cumulative Stats:

%-latency Avg-latency Min-Latency Max-Latency No. of calls Fop

--------- ----------- ----------- ----------- ------------ ----

0.00 0.00 us 0.00 us 0.00 us 40 RELEASE

0.00 0.00 us 0.00 us 0.00 us 55 RELEASEDIR

Duration: 2100 seconds

Data Read: 0 bytes

Data Written: 0 bytes

Interval 0 Stats:

%-latency Avg-latency Min-Latency Max-Latency No. of calls Fop

--------- ----------- ----------- ----------- ------------ ----

0.00 0.00 us 0.00 us 0.00 us 40 RELEASE

0.00 0.00 us 0.00 us 0.00 us 55 RELEASEDIR

Duration: 2100 seconds

Data Read: 0 bytes

Data Written: 0 bytes
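For monitoring, the peer status output above is easy to scan with a script. Here is a minimal sketch, assuming a hypothetical helper named `disconnected_peers` and the Hostname/State line layout shown above. It reads the text from standard input, so it can be tried without a cluster. The second peer in the sample (gluster99) is invented to show a non-empty result.

```shell
#!/bin/sh
# Hypothetical helper: read "gluster peer status" output on stdin and print
# the hostname of every peer whose State line does not end in "(Connected)".
disconnected_peers() {
    awk '
        $1 == "Hostname:" { host = $2 }
        $1 == "State:" && $NF != "(Connected)" { print host }
    '
}

# Sample based on the peer status output above, plus an invented
# disconnected peer (gluster99) for illustration.
disconnected_peers <<'EOF'
Number of Peers: 2
Hostname: gluster02
Uuid: ea354d6a-f7a9-4ef6-abec-3de9fa013c11
State: Peer in Cluster (Connected)
Hostname: gluster99
State: Peer in Cluster (Disconnected)
EOF
```

On a live node you would pipe the output of `sudo gluster peer status` into the function instead of the sample text.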

Gluster aims to keep things as simple as possible, and I would be hard pressed to find another solution that makes it this simple. Now, playing devil’s advocate, let’s say we need to account for added traffic, or we have added a new database to this filesystem. We need to quickly add another node to the cluster to support the new load. We spin up a new VM, install the GlusterFS server package and begin to scale the cluster.

Here we connect to and trust our new node, which will host the new brick.

gluster@gluster01:~$ sudo gluster peer probe gluster03

peer probe: success.

From here we simply add the node to the volume we already have created.

gluster@gluster01:~$ sudo gluster volume add-brick webdata replica 3 gluster03:/webdata force

volume add-brick: success

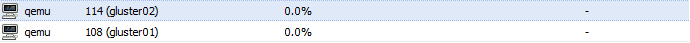

The gluster volume status command will in most cases be your go-to troubleshooting command. Here we can see that the data is replicated, along with the port each brick is listening on.

root@gluster03:/webdata# ls

data1 data11 data13 data15 data17 data19 data20 data22 data24 data26 data28 data3 data31 data33 data35 data37 data39 data40 data6 data8

data10 data12 data14 data16 data18 data2 data21 data23 data25 data27 data29 data30 data32 data34 data36 data38 data4 data5 data7 data9

gluster@gluster01:~$ sudo gluster volume status

Status of volume: webdata

Gluster process Port Online Pid

------------------------------------------------------------------------------

Brick gluster01:/webdata 49152 Y 2773

Brick gluster02:/webdata 49152 Y 2713

Brick gluster03:/webdata 49152 Y 2624

NFS Server on localhost 2049 Y 3041

Self-heal Daemon on localhost N/A Y 3048

NFS Server on gluster02 2049 Y 2756

Self-heal Daemon on gluster02 N/A Y 2763

NFS Server on gluster03 2049 Y 2636

Self-heal Daemon on gluster03
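Since volume status is the usual first stop when troubleshooting, a small filter that lists only the bricks that are not online can save some squinting. This sketch assumes a hypothetical helper named `offline_bricks` and the column layout shown above (the Online flag is the second-to-last field on each Brick row). The third sample row is invented to show a failure case.

```shell
#!/bin/sh
# Hypothetical helper: read "gluster volume status" output on stdin and
# print every brick whose Online column is not "Y". Assumes the column
# layout shown above: Brick <host:path> <port> <online> <pid>.
offline_bricks() {
    awk '$1 == "Brick" && NF >= 5 && $(NF - 1) != "Y" { print $2 }'
}

# First two rows are from the output above; the third is an invented
# failure case for illustration.
offline_bricks <<'EOF'
Brick gluster01:/webdata 49152 Y 2773
Brick gluster02:/webdata 49152 Y 2713
Brick gluster03:/webdata N/A N N/A
EOF
```

With the sample input this prints only gluster03:/webdata. An empty result means every brick row reported Y.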

Our new node is added to the cluster and our filesystems are mirrored. This shows how easily we are able to scale. I took down both gluster01 and gluster02 just to prove redundancy.

root@websrv02:/webdata# touch newfile

root@gluster03:/webdata# ls -ltr newfile

-rw-r--r-- 2 root root 0 Oct 21 22:34 newfile

Because Gluster communicates over TCP, we can create a disaster recovery solution just as simply as we did here. We are able to move private clouds to public clouds or create redundancy between AWS sites.

Final Thoughts:

It’s fun to see the next generation of filesystems in action. The ability to stand up this type of solution on commodity hardware is a big plus for startups or those looking to cut costs. We are already seeing GlusterFS in major companies like Pandora, which runs solely a GlusterFS environment.

The last workload figures I saw were 75 million users listening to 13 million audio files, scaling to petabytes, with a network throughput of 50GB/s. Other companies report running GlusterFS environments into the double-digit petabytes. Currently, I believe these solutions are mostly focused on cloud and business continuity applications. As it stands, GlusterFS is battling Ceph (Canonical/Inktank) in the hash-algorithm distributed filesystem department. Time will tell who will come out on top as the fight to deeply integrate with OpenStack continues. It also seems that small-file performance on these types of filesystems is sensitive to network and I/O latency.

In a future blog article I will cover load balancing our Apache servers and wrap up a completely redundant small web application.